3 Priorities for AI Products

Three rules for building AI products.

There's something I've been trying to articulate recently about the right defaults and assumptions when building AI products. Almost all of this is inseparable from my time spent with Beyond Work over the last year, and particularly from conversations with its AI co-founder Malte (although that's not to say he would agree with everything I assert here!).

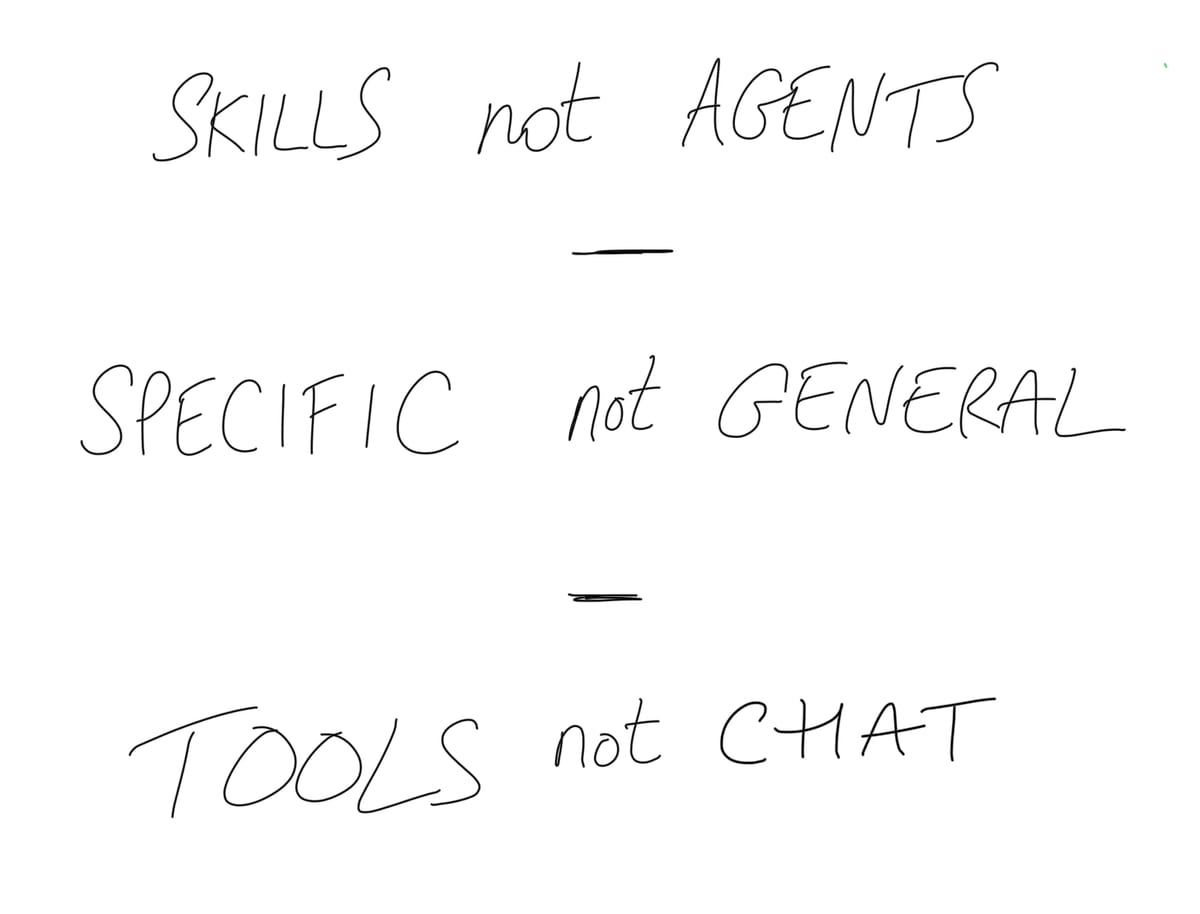

Last night I was scribbling notes at an event, thinking about my frustrations with the current discussions and demos I see around AI. That led to the following three statements:

Agency, not Agents

(Edit: the eagle-eyed among you will notice I've changed this from Skills to Agency, to better reflect points that have come out of discussions about this post since.)

Time and time again, personification in tech has proven a shortcut to disappointment for users. LLMs have made personification far more convincing, but the way they state falsehoods with confidence breaks the spell in a new way. And once it's broken, it's hard to go back.

When people talk about the importance of Agents, I think what they really mean is Agency: the product can take action for a user, rather than just talk to them.

So the question becomes: do you express agency through a vast array of individual agents, each with its own personality and abilities? Or through far fewer agents that can exercise a skill or ability as and when they need to?

That brings us to:

Specific, not General

Getting a good result from AI depends largely on setting a clear context. General tools like Claude and ChatGPT do a remarkably good job, given that you might be asking them about an almost unlimited range of subjects.

But most products do not need to deal with unlimited subjects or contexts. In fact, the better a product understands your intent and the easier it makes acting on it, the happier users are.

If you are building a product with AI, the more focused and specific you can be about the user's particular situation, intent and needs in the moment, the better. This is not a new idea. But offering a general chat interface instead of one designed for the moment is a recipe for a miserable user experience.

Which leads us to:

Tools, not Chat

This requires some balance: LLMs have impressed far beyond traditional machine learning largely because their conversational interface has such an impact on the user.

And yet, if you think I'm going to use ten tools a day that each want me to write long-form requests to get things done for me, I think you're mad.

Furthermore, if you expect the nine in ten people who are, in fact, terrible writers or communicators to do that, you won't bring the potential of this technology to the people it will very likely help most.

Just chucking chat into your product and thinking you've done something magical is to fundamentally neglect the most important responsibilities of product design.